Introduction

Fintech feels instant: you tap “Pay”, and a few hundred milliseconds later funds appear on the other side. Behind that effortlessness sits very physical engineering: dedicated servers, geo‑distributed VPS clusters, encrypted pathways, and high‑throughput networking. If any link wobbles, you get timeouts, retries, duplicate charges, broken 3‑D Secure flows, and support queues bursting at the seams. The honest principle is simple: your money moves because the servers do. This article is a practical map for CTOs, payments architects, SREs, and compliance leads on how to build that reality on Unihost: dedicated nodes for the payments heart, elastic VPS clusters for APIs and supporting services, protected channels, and wide, predictable pipes.

Market Trends

Money now travels at the speed of expectations. Several vectors shape those expectations:

- Instant rails & 24/7 settlement. Faster Payments, SEPA Instant, RTP, and domestic instant schemes push the standard from minutes to seconds.

- Open Banking / Open Finance. Banks are becoming API providers; fintech teams orchestrate dozens of integrations behind a unified normalization and routing layer.

- Multi‑instrument reality. Cards, A2A (account‑to‑account), alternative wallets, BNPL, QR acquiring, “card‑on‑fileless” subscriptions: users expect all of them to behave consistently.

- AI/ML in antifraud and personalization. Real‑time scoring at millions of events per hour, behavioral profiles, graph features, and explainability, all under production API latency budgets.

- Regulation & privacy. PCI DSS, PSD2/PSD3, GDPR, AML/KYC, SCA/3‑D Secure 2.x. Requirements tighten; penalties increase.

Across all these, infrastructure is the product. Conversion, risk, cost per transaction, and brand trust depend directly on it.

Industry Pain Points

Latency and jitter

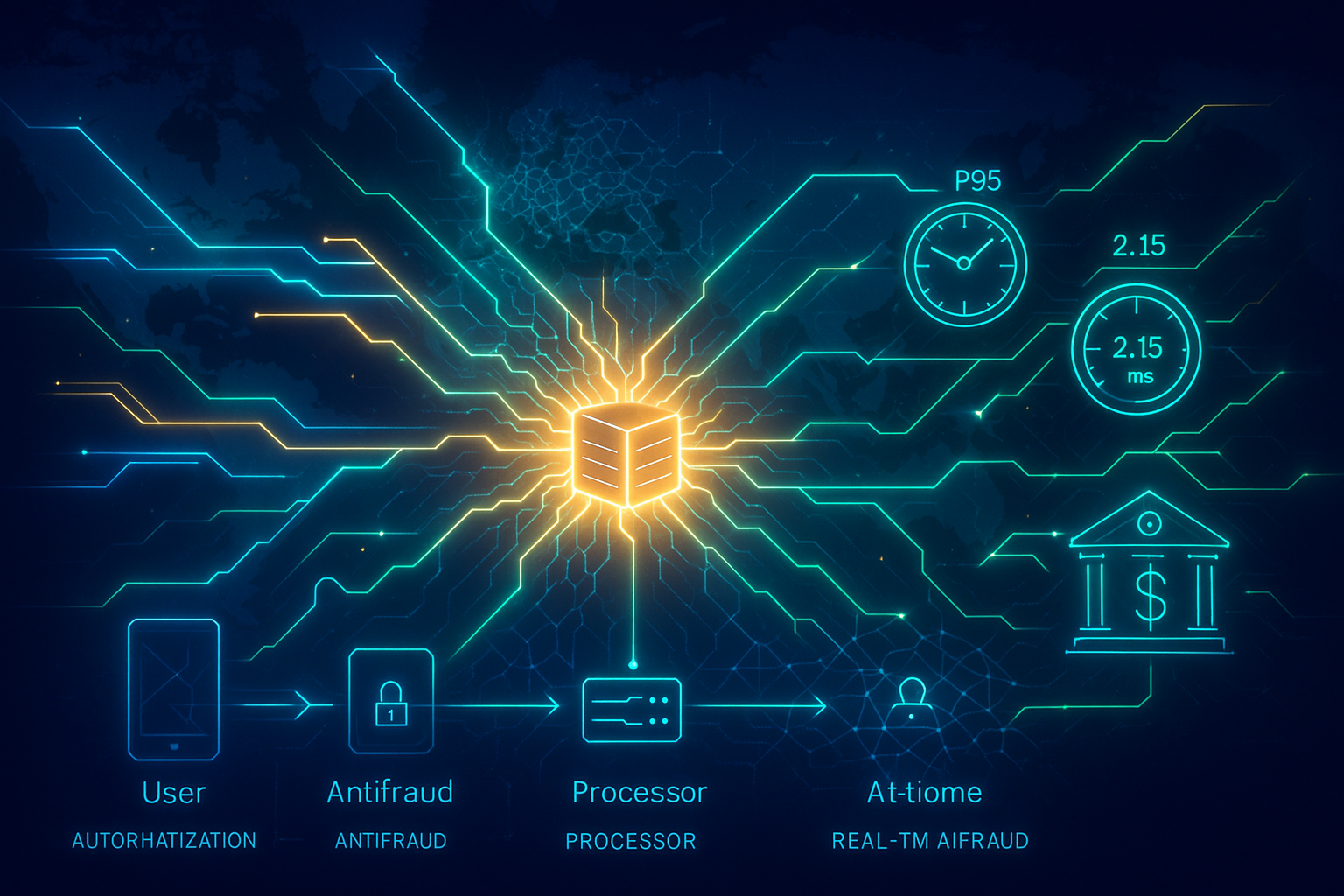

A payment flow is a chain of 6–20 hops: device → merchant site/app → payment gateway → antifraud → processor → issuer/scheme → callbacks/webhooks. A micro‑delay at any segment (CPU queues, noisy neighbors, slow storage) accumulates along the chain. Because every hop has its own tail, the end‑to‑end tail grows with the number of hops. The result is timeouts, retries, double charges, and a flood of support tickets.
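A back‑of‑the‑envelope sketch shows why tails dominate. It assumes that hops are independent and that each one exceeds its own P99 on 1% of requests. This is a simplification, because real hops are often correlated. Under that assumption, the share of requests that hit at least one slow hop is 1 - 0.99^n:

```python
def p_any_slow_hop(hops: int, per_hop_tail: float = 0.01) -> float:
    """Probability that at least one of `hops` independent hops lands in its tail.

    Assumes independence and that each hop exceeds its own percentile
    threshold with probability `per_hop_tail` (0.01 for P99).
    """
    if hops < 0:
        raise ValueError("hops must be non-negative")
    return 1.0 - (1.0 - per_hop_tail) ** hops


if __name__ == "__main__":
    for n in (6, 10, 20):
        print(f"{n:>2} hops: {p_any_slow_hop(n):.1%} of requests see a P99-slow hop")
```

Under these assumptions, roughly 6%, 10%, and 18% of requests see at least one P99‑slow hop at 6, 10, and 20 hops. That is why per‑hop P99 budgets matter more than averages.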

Narrow pipes

Acquiring peaks and seasonal sales are not “+10%”; they are QPS multipliers. If your data path crosses a bottleneck (SATA storage, weak load balancers, an over‑taxed region), antifraud windows lag, decisions are made on partial features, and false‑positive and false‑negative rates jump.

Geography and availability

Users are global and impatient. A single data center is a SPOF in a domain where downtime is painfully expensive. You need multi‑region footprints, replication, health checks, and ingress that always prefers the closest healthy edge (Anycast).

Security and compliance

PCI zones, segmentation, encryption at rest and in transit, keys, HSM, audit trails, retention rules: all of these must be designed in from the start. “We’ll add it later” inevitably becomes “we have to redo everything”.

Operations and the human factor

Most incidents are not exotic bugs. They come from uncoordinated change: a patch during peak, a schema migration without draining queues, enabling heavy metrics collection in production. The cure is SRE discipline: canaries, limit gates, feature flags, and postmortems.

Technological Solution: a Payments Platform on Unihost

The platform consists of four layers that act as one organism.

1) Dedicated servers: the heart of clearing and authorization

The critical core runs on clean bare metal: authorization, clearing, the transaction journal (ledger), tokenization, session management, storage of sensitive artifacts, and HSM/KMS integrations.

- Hardware‑level isolation. No neighbors, no oversubscription. Stable CPU clocks and predictable I/O.

- NVMe RAID for journals and queues: minimal latency, high IOPS, fast recovery from snapshots.

- High‑clock CPUs with large L3: crypto, serialization, and routing without context‑switch churn.

- IPMI / out‑of‑band control: safe patching and diagnostics without touching production traffic.

- PCI segmentation. Private VLANs, ACLs at boundaries, separate subnets for PAN/Token hosts.

2) Geo‑distributed VPS clusters: elasticity and proximity

Microservices, API gateways, storefronts, notifications, reporting, RUM/analytics, and online scoring thrive on VPS clusters.

- Fast scaling. Spin up replicas for a campaign; scale back when the spike ends.

- Close to the user. Regional fronts cut TTFB and reduce 3‑D Secure drops.

- Kubernetes/Nomad. Autoscaling on QPS and queue depth, deployment policies, namespace isolation.

3) Protected channels: the silence of money

- IPsec/GRE over IPsec between regions; private L2 links where required.

- mTLS for service‑to‑service with independent trust roots, automatic cert rotation, explicit east‑west policies.

- WAF/Anti‑DDoS/Rate limiting at public ingress, plus GeoIP policies and device fingerprinting.

4) High‑throughput networking

- 25G/40G/100G per node for dense services and antifraud pipelines.

- L4/L7 load balancing with health checks and session affinity when necessary.

- Anycast for handshake RTT minimization and resilient regional failover.

How It Works End‑to‑End

A) Data and events

Every action becomes an event: payment attempt, 3DS challenge, refund, chargeback, payout. Events land in a bus (Kafka/Redpanda) with normalized schema and strict retention. Idempotency keys guard against duplicates; deduplicators drop defensive retries. Critical topics get their own partitions and priorities.
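The idempotency‑key idea can be sketched in a few lines. This is a single‑process, in‑memory stand‑in; the class and method names are hypothetical. A real core would persist keys in its database under a unique constraint and resolve concurrent duplicates atomically, which this sketch does not do.

```python
import time
from dataclasses import dataclass, field
from typing import Any, Callable, Dict, Optional, Tuple


@dataclass
class IdempotencyStore:
    """In-memory stand-in for a durable idempotency table (hypothetical).

    Maps an idempotency key to the first result produced for it, so a
    retried request replays the original outcome instead of re-executing.
    """
    ttl_seconds: float = 24 * 3600
    _entries: Dict[str, Tuple[float, Any]] = field(default_factory=dict)

    def execute(self, key: str, operation: Callable[[], Any],
                now: Optional[float] = None) -> Tuple[Any, bool]:
        """Run `operation` once per key; return (result, was_duplicate)."""
        now = time.monotonic() if now is None else now
        cached = self._entries.get(key)
        if cached is not None and now - cached[0] < self.ttl_seconds:
            return cached[1], True  # duplicate or retry: replay stored result
        result = operation()  # if this raises, nothing is stored and a retry may proceed
        self._entries[key] = (now, result)
        return result, False


charges = []


def charge():
    charges.append(100)
    return {"status": "captured", "amount": 100}


store = IdempotencyStore()
first, _ = store.execute("order-42", charge)
retry, was_duplicate = store.execute("order-42", charge)
print(was_duplicate, retry == first, len(charges))  # True True 1
```

The retry returns the stored result and the charge runs only once. Keys expire after the TTL, so the TTL must outlive the longest client retry window.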

B) Applications and logic

- Core on dedicated. Authorization, clearing, the ledger, tokenization, limits, fee calculation: anything latency‑ or integrity‑sensitive.

- Edge on VPS. Fronts, API gateways, online antifraud, notifications, reporting, support search/RAG.

- 3‑D Secure 2.x. Lean challenge pages with strict CSP/SRI at the edge; crypto and ACS integrations in the core.
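Subresource Integrity (SRI) pins each challenge‑page or widget asset to a hash, so a script that was tampered with on a CDN fails to load. The `integrity` value is the hash algorithm name followed by the base64 digest of the file. The sketch below computes it with the standard library; the asset URL and helper names are hypothetical, and the CSP header itself is not shown.

```python
import base64
import hashlib


def sri_hash(asset: bytes, algorithm: str = "sha384") -> str:
    """Return a Subresource Integrity value such as 'sha384-<base64 digest>'."""
    if algorithm not in ("sha256", "sha384", "sha512"):
        raise ValueError("SRI supports sha256, sha384 and sha512")
    digest = hashlib.new(algorithm, asset).digest()
    return f"{algorithm}-{base64.b64encode(digest).decode('ascii')}"


def script_tag(src: str, asset: bytes) -> str:
    # crossorigin is required for SRI checks on scripts served from another origin
    return f'<script src="{src}" integrity="{sri_hash(asset)}" crossorigin="anonymous"></script>'


widget_js = b"/* payment widget bundle */"
print(script_tag("https://cdn.example.com/pay-widget.js", widget_js))
```

Compute the hash at build time from the exact bytes you deploy. Any rebuild of the asset requires regenerating the tag.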

C) Antifraud and ML

Decisions must fit into tens of milliseconds. The stack is hybrid: graph features (device/card/address relationships), tree/boosting for baseline scoring (fast, explainable), LSTM/Transformer for event sequences. Feature stores are replicated per region; hot features live in Redis. For interpretability use SHAP/feature importance. Some “hard cases” are escalated to asynchronous reconsideration.

D) Ledger, settlement, and reconciliation

Financial truth lives in the ledger: double‑entry, immutable events, strict numbering, checksums. Reconciliations with banks/PSPs run in batches; discrepancies auto‑open incidents with playbooks. For cross‑border flows add currency files, FX rates, hedging, and fee allocation.
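The ledger properties above (double‑entry, immutability, strict numbering, checksums) can be illustrated with a minimal in‑memory journal. In this sketch, "checksums" are implemented as a SHA‑256 hash chain over each entry and its predecessor. That is one possible design, assumed here rather than prescribed. The sign convention and account names are also hypothetical.

```python
import hashlib
import json
from dataclasses import dataclass
from decimal import Decimal


@dataclass(frozen=True)
class Posting:
    account: str
    amount: Decimal  # positive = debit, negative = credit (convention chosen here)


class Ledger:
    """Append-only double-entry journal with sequence numbers and a hash chain."""

    def __init__(self) -> None:
        self.entries: list[dict] = []

    def post(self, description: str, postings: list[Posting]) -> dict:
        # Double-entry rule: every entry must balance to exactly zero.
        if sum((p.amount for p in postings), Decimal("0")) != 0:
            raise ValueError("unbalanced entry: debits must equal credits")
        prev_hash = self.entries[-1]["hash"] if self.entries else "0" * 64
        body = {
            "seq": len(self.entries) + 1,  # strict, gap-free numbering
            "description": description,
            "postings": [[p.account, str(p.amount)] for p in postings],
            "prev_hash": prev_hash,
        }
        body["hash"] = self._digest(body)
        self.entries.append(body)
        return body

    @staticmethod
    def _digest(body: dict) -> str:
        payload = {k: v for k, v in body.items() if k != "hash"}
        return hashlib.sha256(json.dumps(payload, sort_keys=True).encode()).hexdigest()

    def verify(self) -> bool:
        """Recompute the chain; any edited, reordered or missing entry fails."""
        prev = "0" * 64
        for i, entry in enumerate(self.entries, start=1):
            if entry["seq"] != i or entry["prev_hash"] != prev:
                return False
            if self._digest(entry) != entry["hash"]:
                return False
            prev = entry["hash"]
        return True


ledger = Ledger()
ledger.post("card capture #1001", [
    Posting("acquirer:clearing", Decimal("100.00")),
    Posting("merchant:settlement", Decimal("-100.00")),
])
print(ledger.verify())  # True
```

Decimal avoids binary floating‑point rounding on money amounts. Corrections are posted as new, reversing entries rather than edits, which keeps verification intact.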

E) Observability and SLOs

- Metrics: P50/P95/P99 per hop; TTFB for fronts; 3DS durations; auth error codes; queue depth; retry/abort ratios.

- Tracing: end‑to‑end correlation IDs from browser/SDK through the core and back to webhooks; dependency maps highlighting hot spots.

- SLOs: 95–99.99% per region; error budgets for 3DS/RTT tails; alerts keyed to jitter anomalies.
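An SLO becomes operational once it is expressed as an error budget. The sketch below assumes a 30‑day rolling window. It computes the allowed unavailability for a target and a burn rate, where 1.0 means the budget is being spent exactly on schedule. Alerting on burn rate is a common SRE pattern; the function names are illustrative.

```python
from datetime import timedelta


def error_budget(slo: float, window: timedelta = timedelta(days=30)) -> timedelta:
    """Allowed unavailability for an availability SLO over the window."""
    if not 0.0 < slo < 1.0:
        raise ValueError("slo must be a fraction, e.g. 0.9995")
    return window * (1.0 - slo)


def budget_burn_rate(bad_events: int, total_events: int, slo: float) -> float:
    """Observed error ratio divided by the ratio the SLO allows."""
    if total_events == 0:
        return 0.0
    return (bad_events / total_events) / (1.0 - slo)


for target in (0.9995, 0.9999):
    print(target, error_budget(target))
```

A 99.95% target (the availability goal of the A2A scenario in this article) leaves about 21.6 minutes per 30 days. A 99.99% target leaves about 4.3 minutes. A sustained burn rate well above 1.0 is a better paging signal than a single slow request.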

Architecture in Practice

Data plane

- PostgreSQL/Timescale for transactions and telemetry with read replicas for reporting.

- Object storage for reports, archives, snapshots.

- Redis/Memcached for session/token caches and hot features, with deliberately chosen TTLs and eviction policies.
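The TTL‑plus‑eviction trade‑off can be shown with a small in‑process cache. Redis applies the same ideas server‑side, through key expiry and `maxmemory` eviction policies. This sketch is not a Redis client; the class name and the injectable clock are assumptions made for illustration and testing.

```python
import time
from collections import OrderedDict
from typing import Any, Callable, Hashable, Optional


class TTLLRUCache:
    """Bounded cache with per-entry TTL and least-recently-used eviction."""

    def __init__(self, max_entries: int, ttl_seconds: float,
                 clock: Callable[[], float] = time.monotonic) -> None:
        self.max_entries = max_entries
        self.ttl = ttl_seconds
        self.clock = clock
        self._data: "OrderedDict[Hashable, tuple]" = OrderedDict()

    def get(self, key: Hashable) -> Optional[Any]:
        item = self._data.get(key)
        if item is None:
            return None
        expires_at, value = item
        if self.clock() >= expires_at:
            del self._data[key]  # lazily drop stale features
            return None
        self._data.move_to_end(key)  # mark as recently used
        return value

    def set(self, key: Hashable, value: Any) -> None:
        self._data[key] = (self.clock() + self.ttl, value)
        self._data.move_to_end(key)
        while len(self._data) > self.max_entries:
            self._data.popitem(last=False)  # evict the least recently used entry
```

Short TTLs keep fast‑changing features (velocity counters, for example) fresh at the cost of more cache misses. The size bound keeps memory predictable during traffic spikes.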

Event plane

- Kafka/Redpanda topics for auth, refunds, antifraud, webhooks, notifications; retention policies; cross‑region replication; deduplication.

Application plane

- Dedicated: auth, clearing, tokenization, limits, fee logic, integrations with banks/schemes.

- VPS: fronts, APIs, merchant portals, reporting, online antifraud, outbound notifications.

Security & network

- Anycast ingress + L7 LB.

- Private VLANs, IPsec, mTLS, WAF/Anti‑DDoS.

- Secrets management, HSM/KMS, audit trails.

DR/BCP

- Warm‑standby replication; regular failover drills.

- Runbooks for regional loss, link degradation, jitter spikes, API attacks.

Step‑by‑Step Scenario: Launching A2A & P2P

Goal. Instant account‑to‑account transfers with personalized limits, two regions, 99.95% availability.

- Idempotency & journal. Generate keys client‑side and verify in the core; journals on NVMe RAID; snapshots and PITR enabled.

- Network path. Anycast routes users to the nearest region; IPsec ties regions; inside the region use a service mesh with mTLS and strict ACLs.

- Authorization/clearing on dedicated; limits/profiles there as well; scoring on VPS clusters with autoscaling; retries with exponential backoff and dedup.

- Daily batches with banks/PSPs; divergences open tickets automatically.

- Dashboards per hop and segment; alerts on P95/P99 growth, jitter, queue depth; incident postmortems.

- Weekly exercises: DNS/Anycast shift, idempotency preservation, draining queues.
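The retry rule from the steps above (exponential backoff, with dedup in the core) can be sketched as a client that generates one idempotency key per logical transfer and reuses it on every attempt. The backoff uses the "full jitter" variant, in which each delay is drawn uniformly between zero and the capped exponential value. `send` and `TransientError` are hypothetical stand‑ins for your transport and its retryable failures.

```python
import random
import time
import uuid
from typing import Callable, Optional


class TransientError(Exception):
    """Retryable failure such as a timeout or HTTP 503 (hypothetical)."""


def backoff_delays(attempts: int, base: float = 0.1, cap: float = 2.0,
                   rng: Optional[random.Random] = None) -> list[float]:
    """Full-jitter backoff: delay_i ~ U(0, min(cap, base * 2**i))."""
    rng = rng or random.Random()
    return [rng.uniform(0, min(cap, base * 2 ** i)) for i in range(attempts)]


def send_with_retries(send: Callable[[str], dict], max_attempts: int = 4,
                      sleep: Callable[[float], None] = time.sleep) -> dict:
    # One key per logical transfer, generated client-side and reused on every
    # retry, so the core can recognize and drop duplicates.
    key = str(uuid.uuid4())
    delays = backoff_delays(max_attempts - 1)
    for attempt in range(max_attempts):
        try:
            return send(key)
        except TransientError:
            if attempt == max_attempts - 1:
                raise  # give up; the caller surfaces a pending/unknown state
            sleep(delays[attempt])
    raise AssertionError("unreachable")
```

Jitter spreads retries from many clients over time, so a brief core hiccup does not turn into a synchronized retry storm.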

Expected outcome. Median latencies of 150–200 ms and P95 under 350–500 ms for short flows; zero transactions lost, as confirmed by the journal; resilience under peaks and network degradation.

Regulatory Matrix for a Fintech Platform

Fintech products live under regulation. To move fast without fines, think in a matrix of data flows × regulatory domains.

PCI DSS 4.0. Strictly defines zones where PAN/Track data can be touched. For product teams that means: (1) minimize PCI scope; (2) place fronts and all non‑PAN services outside PCI scope; (3) enforce network‑level segmentation (VLAN/ACL), MFA for admin access, end‑to‑end encryption in transit and at rest, and pervasive change logs. On Unihost, this becomes dedicated subnets, private VLANs, separate servers for the core, and centralized key management (HSM/KMS integration).

PSD2/PSD3 + SCA/3‑D Secure 2.x. Strong Customer Authentication, dynamic linking, sealed redirects, consistent error handling. Keep Anycast ingress and regional fronts: any network “chatter” hurts SCA completion.

GDPR & local data laws. Data minimization and localization, lifecycle control, right to erasure. Separate hot operational journals from analytics; store tokens/identifiers instead of raw PII whenever possible.

AML/KYC/sanctions lists. Orchestrate verification providers with timeouts and caching; log decisions for reproducibility in disputes. VPS clusters scale KYC during onboarding spikes.

Network Patterns and Default Encryption

TLS 1.3 + HSTS + OCSP stapling. Modern cipher suites, minimum RTT in handshakes, forced HTTPS. Combine L4 for simple flows with L7 for smart routing/policy.

QUIC/HTTP‑3 for mobile fronts mitigates loss and jitter. Preconnect and early‑hints speed up critical asset fetches for payment widgets.

mTLS and service mesh. End‑to‑end service authentication with distinct trust roots per env; automated rotation; declarative east‑west policies.
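For an internal service that terminates TLS itself, the TLS 1.3 and mTLS rules above can be sketched with Python's standard `ssl` module. The context accepts only TLS 1.3 and requires a client certificate. It trusts only the CA bundle you pass in, which can differ per environment. The file names are hypothetical. OCSP stapling is usually handled by the terminating proxy or load balancer and is not shown. The HSTS value is an illustrative one‑year policy.

```python
import ssl
from typing import Optional


def build_mtls_server_context(certfile: Optional[str] = None,
                              keyfile: Optional[str] = None,
                              client_ca: Optional[str] = None) -> ssl.SSLContext:
    """Server-side context: TLS 1.3 only, client certificates required."""
    ctx = ssl.SSLContext(ssl.PROTOCOL_TLS_SERVER)  # loads no system CAs by default
    ctx.minimum_version = ssl.TLSVersion.TLSv1_3
    ctx.verify_mode = ssl.CERT_REQUIRED  # mTLS: reject peers without a valid cert
    if certfile:
        ctx.load_cert_chain(certfile=certfile, keyfile=keyfile)
    if client_ca:
        # Trust only this environment's internal CA (a distinct root per env).
        ctx.load_verify_locations(cafile=client_ca)
    return ctx


# Response header for public fronts (illustrative values).
HSTS_HEADER = ("Strict-Transport-Security", "max-age=31536000; includeSubDomains")
```

In production you would pass the service certificate, its key, and the environment's CA bundle, for example `build_mtls_server_context("svc.crt", "svc.key", "prod-internal-ca.pem")` with hypothetical file names. You would then wrap the listening socket with `ctx.wrap_socket(sock, server_side=True)`. A mesh sidecar usually does the same work and handles automated rotation.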

Anycast + geo. Ingress terminates close to the user, reducing 3DS and auth failures. With regional degradation, Anycast collapses traffic into the nearest healthy region without client DNS churn.

Antifraud: Millisecond‑Budget Decisions

Fraud patterns evolve faster than releases; your design must blend predictiveness and explainability.

Signals & features. Device/browser IDs, graph links (card‑address‑device), velocity of IP/geo change, behavioral cadence, form‑fill quality, refund/chargeback history, bot markers. Hot features in Redis; cold features in analytics.

Models. Gradient boosting/trees for baseline scoring (fast, explainable); LSTM/Transformer for sequential event patterns; basic graph metrics (PageRank‑like) or offline GNNs with online refresh.

Rules in tandem. White‑listed trusted merchants/cards, black‑listed patterns, amount/frequency caps. The decision is an ensemble: models yield probability; rules enforce hard constraints and carve‑outs.

Two loops. Online loop with a 10–30 ms budget; asynchronous re‑scoring for gray cases. For ambiguous decisions, place a hold, notify the user, and push to manual review.
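The ensemble described above can be sketched as follows: hard rules first, then the model probability mapped to approve, decline, or a gray "review" band. The review band covers the hold, asynchronous re‑scoring, and manual‑review path. The lists, caps, and thresholds below are hypothetical placeholders, and `fraud_probability` is assumed to come from the online model.

```python
from dataclasses import dataclass
from enum import Enum


class Decision(Enum):
    APPROVE = "approve"
    DECLINE = "decline"
    REVIEW = "review"  # hold, notify, async re-scoring / manual review


@dataclass
class Txn:
    card_id: str
    merchant_id: str
    amount: float
    txns_last_hour: int


# Hypothetical rule inputs and thresholds, for illustration only.
BLOCKED_CARDS = {"card-stolen-1"}
TRUSTED_MERCHANTS = {"merchant-utility-7"}
MAX_AMOUNT = 5_000.0
MAX_TXNS_PER_HOUR = 20
APPROVE_BELOW, DECLINE_ABOVE = 0.2, 0.8


def decide(txn: Txn, fraud_probability: float) -> Decision:
    # 1) Hard constraints first: rules override the model.
    if txn.card_id in BLOCKED_CARDS:
        return Decision.DECLINE
    if txn.amount > MAX_AMOUNT or txn.txns_last_hour > MAX_TXNS_PER_HOUR:
        return Decision.REVIEW
    # 2) Carve-out: trusted merchants get a wider approval band.
    approve_below = 0.5 if txn.merchant_id in TRUSTED_MERCHANTS else APPROVE_BELOW
    # 3) Model probability mapped to three bands; the middle band is "gray".
    if fraud_probability < approve_below:
        return Decision.APPROVE
    if fraud_probability > DECLINE_ABOVE:
        return Decision.DECLINE
    return Decision.REVIEW
```

Keeping the rules outside the model makes every hard decline explainable by a named rule. The width of the gray band controls how much traffic flows into the slower asynchronous loop.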

Observability: What to Actually Measure

Means are deceptive; tails tell the truth. Measure distribution tails and causal chains.

- P50/P95/P99 per hop (front→gateway, gateway→core, core→bank, bank→webhooks). Separate SLOs for 3DS challenges vs auto‑payments.

- Front TTFB and payment widget critical asset timing (JS/CSS). CSP/SRI doubles as protection and supply‑chain health signal.

- Queues & retries. Depth per topic/queue; retry share and outcomes. If retries exceed X%, flip limit gates.

- FP/FN rates in antifraud with business impact (lost revenue vs fraud losses).

- Saturation metrics (CPU, L3, NUMA, I/O) in the core: when caches spill, load curves kink.
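Two of the measurements above, per‑hop tail percentiles and the "retries exceed X%" limit gate, can be sketched as follows. The percentile uses the nearest‑rank method. The gate tracks the retry share over a sliding window of recent requests and leaves X as a parameter. Class and method names are hypothetical.

```python
import math
from collections import deque
from typing import Deque, Sequence


def percentile(samples: Sequence[float], p: float) -> float:
    """Nearest-rank percentile (p in (0, 100]) of latency samples."""
    if not samples:
        raise ValueError("no samples")
    ordered = sorted(samples)
    rank = math.ceil(p * len(ordered) / 100)
    return ordered[max(rank, 1) - 1]


class RetryShareGate:
    """Signals limiting when retries exceed `threshold` of recent requests."""

    def __init__(self, threshold: float, window: int = 1000) -> None:
        self.threshold = threshold
        self.events: Deque[bool] = deque(maxlen=window)  # True = this request was a retry

    def record(self, is_retry: bool) -> None:
        self.events.append(is_retry)

    @property
    def retry_share(self) -> float:
        return sum(self.events) / len(self.events) if self.events else 0.0

    def should_limit(self) -> bool:
        # True means: flip the limit gate (shed or throttle upstream traffic).
        return self.retry_share > self.threshold
```

Sorting every sample is fine for a sketch. At production volumes, per‑hop percentiles normally come from histograms or sketches in the metrics pipeline.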

Economics: Cost of a Successful Transaction

Let \(C_i\) be infrastructure cost per month, \(C_{inc}\) incident cost (penalties, SLA credits, team hours), \(C_{ops}\) support/retries, \(F\) fraud/chargeback losses, and \(N_{success}\) the number of successful payments. Then:

\[ C_{tx} = \frac{C_i + C_{inc} + C_{ops} + F}{N_{success}}. \]

Engineering aims to raise \(N_{success}\) (conversion) and reduce the numerator. A predictable dedicated core, an elastic VPS edge, and fast networks/storage reduce jitter, timeouts, and retries, pushing down \(C_{ops}\) and \(C_{inc}\). Better antifraud reduces \(F\). Over months, ROI improves.
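A worked example makes the formula concrete. All monthly figures below are hypothetical and chosen only to show the mechanics: a higher infrastructure bill can still lower the cost per successful transaction when it cuts incidents, retries, and fraud while lifting conversion.

```python
from dataclasses import dataclass


@dataclass
class MonthlyCosts:
    infra: float      # C_i
    incidents: float  # C_inc: penalties, SLA credits, team hours
    ops: float        # C_ops: support and retries
    fraud: float      # F: fraud and chargeback losses


def cost_per_successful_tx(costs: MonthlyCosts, n_success: int) -> float:
    """C_tx = (C_i + C_inc + C_ops + F) / N_success."""
    if n_success <= 0:
        raise ValueError("need at least one successful payment")
    numerator = costs.infra + costs.incidents + costs.ops + costs.fraud
    return numerator / n_success


# Hypothetical months before and after a migration.
before = MonthlyCosts(infra=40_000, incidents=25_000, ops=15_000, fraud=20_000)
after = MonthlyCosts(infra=45_000, incidents=8_000, ops=7_000, fraud=14_000)
print(cost_per_successful_tx(before, 2_000_000))  # 0.05
print(cost_per_successful_tx(after, 2_100_000))
```

In this illustration, infrastructure spend rises by 12.5%, yet the cost per successful transaction falls from 0.05 to about 0.035 currency units.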

Incident Playbooks: If…Then

Case A: inter‑region link degradation. Symptoms: rising P95/P99 on gateway→core hops; 3DS completion dips. Actions: prioritize queues, shift heavy services to a healthy region via Anycast, temporarily narrow WAF geo policies, enable degraded‑mode pages.

Case B: holiday‑driven fraud spike. Symptoms: higher issuer declines, chargebacks accelerating with a lag. Actions: tighten rule thresholds, enable re‑scoring of gray transactions, raise the online scoring budget within safe limits, expand scoring clusters.

Case C: core CPU saturation at peak. Symptoms: CPU run queues spike, front TTFB climbs. Actions: scale the core vertically, pause non‑critical batches, route reports to read replicas, and separate workloads ahead of the next peak.

Testing & Acceptance

Load tests. Model realistic mixes: 3DS share, refund share, P2P share, amount/currency distribution. Watch the tails; plan capacity using Little’s law with 30–40% headroom.
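Little's law (L = λ·W) converts a target arrival rate and time‑in‑system into the number of requests in flight. That number is the minimum concurrency (workers, connections, threads) a hop must support. The sketch below adds headroom on top. Two assumptions apply: the law holds for long‑run averages in a stable system, and using a tail latency such as P95 for W is a deliberately conservative choice. The function name is hypothetical.

```python
import math


def required_concurrency(qps: float, latency_s: float, headroom: float = 0.35) -> int:
    """In-flight capacity from Little's law (L = lambda * W) plus headroom."""
    if qps < 0 or latency_s < 0 or headroom < 0:
        raise ValueError("inputs must be non-negative")
    in_flight = qps * latency_s  # L: average requests in the system
    return math.ceil(in_flight * (1 + headroom))


# Hypothetical peak: 2,000 QPS with a 250 ms end-to-end P95.
print(required_concurrency(2_000, 0.25, headroom=0.4))
```

Here 2,000 × 0.25 s gives 500 requests in flight, and 40% headroom raises the pool to about 700.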

Chaos/DR drills. Planned link downs, region blackout, KYC provider outages, database degradation. Goal: the customer sees “slightly slower”, not “down”.

Security checks. Pen‑test payment widgets, DOM/SDK manipulation attempts, CSP validation, secrets rotation, MFA on privileged access.

Capacity Planning

QPS → cores. Tokenization/crypto and JSON marshaling are CPU‑intensive. Estimate cycles per request × target QPS; add ×1.3–1.5 headroom for tails.
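The cycles‑per‑request estimate works out as follows: busy cores = cycles per request × QPS ÷ clock rate, then multiplied by the headroom factor. The profile numbers are hypothetical; in practice you would measure cycles per request with a profiler such as `perf stat` under a realistic mix. The sketch ignores SMT, NUMA effects, and turbo clocks.

```python
import math


def cores_needed(cycles_per_request: float, target_qps: float,
                 clock_hz: float, headroom: float = 1.4) -> int:
    """Cores = cycles/request x QPS / cycles per core-second, times headroom."""
    busy_cores = cycles_per_request * target_qps / clock_hz
    return math.ceil(busy_cores * headroom)


# Hypothetical: 1.2M cycles per tokenization request, 10k QPS, 3 GHz cores.
print(cores_needed(1_200_000, 10_000, 3_000_000_000, headroom=1.5))  # 6
```

Four cores are fully busy at the target rate; the ×1.5 headroom for tails brings the estimate to six.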

Network. Throughput for webhooks, logs, antifraud feeds. For short‑leg domestic flows 25G is the minimum; for multi‑region cores use 40G/100G.

Storage. Journals on NVMe RAID; keep hot indexes warm in memory; plan IOPS by the 99th percentile, not averages; take snapshots without production impact.

Migration to Unihost: Checklist

- Catalog services & dependencies; move fronts and non‑PCI workloads to VPS clusters.

- Pilot region near current traffic sources; dual‑write journals during transition; verify consistency.

- Anycast ingress and geo policies; IPsec inter‑region links.

- Canary 5% → 20% → 50% → 100%; limit gates on risky services.

- Rollback playbooks; CI/CD kill‑switch; peak‑season freeze windows.

- PCI zone docs; audit artifacts (change management, accesses, logs) registered from day one.
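The canary sequence from the checklist (5% → 20% → 50% → 100%) can be expressed as a loop that promotes the new version only while health stays within limits, and rolls back at the first breach. `observe` is a hypothetical hook that shifts the given share of traffic, waits for a soak period, and returns measured health. The thresholds are placeholders.

```python
from dataclasses import dataclass
from typing import Callable, List

STAGES: List[int] = [5, 20, 50, 100]  # percent of traffic, per the checklist


@dataclass
class StageHealth:
    error_rate: float
    p99_ms: float


def run_canary(observe: Callable[[int], StageHealth],
               max_error_rate: float = 0.001, max_p99_ms: float = 500.0) -> List[str]:
    """Advance through traffic stages; roll back on the first unhealthy stage."""
    log: List[str] = []
    for percent in STAGES:
        health = observe(percent)
        if health.error_rate > max_error_rate or health.p99_ms > max_p99_ms:
            log.append(f"rollback at {percent}%")  # trigger the rollback playbook
            return log
        log.append(f"promoted to {percent}%")
    return log
```

Wiring the same check into CI/CD gives the kill‑switch from the checklist a concrete, automatic trigger.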

Executive Summary

- Objective: minimize cost per successful transaction while maximizing conversion with controlled risk.

- Means: dedicated core + geo VPS clusters + protected channels + high‑throughput networking.

- Effect: lower latency/jitter → fewer retries → higher conversion; elastic capacity → no “paying for peak” all year; SRE/observability → fewer incidents and faster recovery.

Conclusion

Fintech is about trust and speed. When infrastructure is right, the “instant money” magic becomes routine: users see a smooth UX, merchants get funds without surprises, teams sleep at night. When money moves, it moves inside Unihost: dedicated servers keep the core’s pulse steady, geo‑distributed VPS accelerates the edge, protected channels maintain silence and privacy, and wide networks give antifraud and telemetry room to breathe.

Try Unihost servers: stable infrastructure for your fintech projects.

Order a dedicated server or a geo‑distributed VPS cluster on Unihost, and let your money move as fast as your ideas.